Introduction

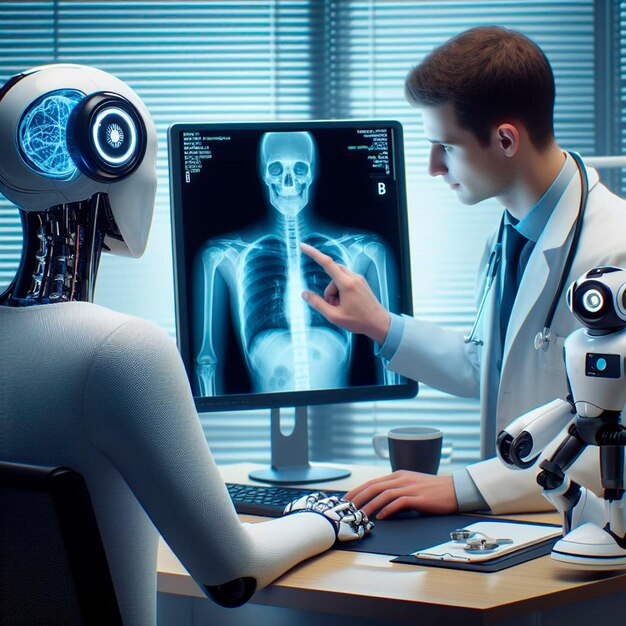

The integration of Artificial Intelligence (AI) in medicine has grown rapidly, with applications ranging from diagnostics to robotic surgery and administrative support. This advancement has prompted an important question: Will AI replace medical doctors? While AI demonstrates remarkable potential, its capabilities must be examined critically. This discussion evaluates both the positive prospects and the limitations of AI in replacing human medical professionals.

Positive Aspects: The Case for AI Integration

1. Enhanced Diagnostic Accuracy

AI systems, especially those using deep learning, have demonstrated accuracy comparable to, and in some cases exceeding, that of physicians in specific diagnostic tasks. For example, a deep neural network classified skin cancer from images at a level comparable to dermatologists (Esteva et al., 2017). These tools can process vast datasets, identify patterns too subtle for the human eye, and be retrained as new data becomes available.

2. Efficiency and Accessibility

AI can dramatically reduce the time required for diagnoses and administrative tasks. Chatbots and virtual assistants can triage patients, manage appointments, and offer medical advice, potentially alleviating the burden on overworked healthcare systems, particularly in under-resourced regions.

3. Reduction of Human Error

Fatigue, bias, and emotional involvement may affect physicians' decisions. AI systems do not tire or become emotionally involved, so they can provide consistent evaluations, although they may still inherit bias from the data they are trained on. This consistency could lead to improved patient safety and outcomes.

4. 24/7 Availability

Unlike human doctors, AI systems do not require rest and can offer round-the-clock service. In emergency settings or rural areas with limited healthcare providers, AI can act as a frontline decision-making tool or support system.

Negative Aspects: The Case Against Full Replacement

1. Lack of Empathy and Human Touch

Medical practice is not purely technical; it involves empathy, communication, and trust — aspects central to the doctor-patient relationship. AI lacks consciousness and emotional intelligence, making it unsuitable for delivering bad news, counseling, or understanding nuanced psychosocial contexts.

2. Ethical and Legal Concerns

Responsibility and accountability when an AI system makes an error remain unclear. Who is liable: the developer, the healthcare institution, or the clinician who relied on the system? Ethical dilemmas also arise regarding patient data privacy and algorithmic bias, which can exacerbate existing healthcare disparities.

3. Limitations in Complex Clinical Judgments

AI performs best in narrowly defined tasks but struggles with complex, multi-factorial decisions that require contextual understanding. Cases involving ambiguous symptoms, comorbidities, or incomplete data still require human expertise.

4. Risk of Overdependence and De-skilling

Over-reliance on AI could lead to the erosion of physicians’ diagnostic skills over time. There is also a concern that the next generation of doctors may become less proficient if trained in environments heavily reliant on AI assistance.

Conclusion: Augmentation, Not Replacement

While AI will undoubtedly transform medicine, it is unlikely to completely replace doctors in the foreseeable future. Rather, a collaborative model, where AI augments human expertise, offers the most promising path forward. This synergy could optimize care delivery, improve outcomes, and preserve the essential human elements of medicine. Future policy and research must focus on ethical integration, upskilling of professionals, and maintaining the core values of medical practice.
